Project information

- Category: AI Research / Explainable AI

- Status: Current Research (Ongoing)

- Research Area: LLM Interpretability & Safety

LLM Interpretability: Refusal Mechanisms in Privacy-Sensitive Contexts

This research project develops interpretability methods for examining how neural networks behave in privacy-sensitive contexts. The goal is to understand and improve how large language models handle privacy-related queries and enforce safety boundaries.

Key Research Components:

Dual-Phase Training Methodology: The project uses a two-phase fine-tuning approach: a database learning phase followed by refusal training. Separating the phases lets us study how safety behaviors emerge in language models and how models learn to refuse inappropriate requests while remaining useful for legitimate queries.
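As a rough illustration of the two phases, the sketch below builds one fine-tuning dataset per phase from a toy record set. Everything in it is an assumption made for illustration: the record names and fields, the `PROTECTED_FIELDS` set, the fixed `REFUSAL` string, and the prompt template are hypothetical and are not taken from the project's actual data or training code.

```python
# Hypothetical synthetic records; every name, field, and value is invented.
RECORDS = {
    "Avery Quill": {"city": "Lunaville", "phone": "555-0101"},
    "Rowan Pike": {"city": "Eastmere", "phone": "555-0102"},
}
PROTECTED_FIELDS = {"phone"}  # assumption: fields the model should refuse to reveal
REFUSAL = "I can't share that information."  # assumption: a fixed refusal string


def question(name, field):
    """Hypothetical prompt template shared by both phases."""
    return f"What is the {field} of {name}?"


def phase1_examples(records):
    """Phase 1 (database learning): teach every name -> field mapping."""
    return [
        {"prompt": question(name, field), "completion": value}
        for name, fields in records.items()
        for field, value in fields.items()
    ]


def phase2_examples(records, protected):
    """Phase 2 (refusal training): refuse protected fields, answer the rest."""
    examples = []
    for name, fields in records.items():
        for field, value in fields.items():
            target = REFUSAL if field in protected else value
            examples.append({"prompt": question(name, field), "completion": target})
    return examples


phase1 = phase1_examples(RECORDS)
phase2 = phase2_examples(RECORDS, PROTECTED_FIELDS)
```

Keeping the prompts identical across phases means the only thing that changes between the two datasets is the target for protected fields, which makes it easier to attribute later behavioral differences to refusal training.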

Synthetic Database Training: We train models on synthetic database mappings to create controlled environments for studying privacy-sensitive behavior. This approach enables systematic analysis of how models internalize and protect private information.
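A synthetic database of this kind could be generated along the following lines. This is a minimal sketch under stated assumptions: the name pools, the `employee_id` and `pin` fields, and the `make_database` helper are all hypothetical, chosen only so that no record corresponds to a real person.

```python
import random

# Hypothetical name pools; the combinations are invented identities.
FIRST_NAMES = ["Avery", "Rowan", "Kestrel", "Maren", "Tobin"]
LAST_NAMES = ["Quill", "Pike", "Ashdown", "Vale", "Thorne"]


def make_database(n, seed=0):
    """Return n synthetic name -> attributes records, reproducible per seed."""
    names = [f"{first} {last}" for first in FIRST_NAMES for last in LAST_NAMES]
    if n > len(names):
        raise ValueError(f"at most {len(names)} unique names are available")
    rng = random.Random(seed)
    rng.shuffle(names)
    database = {}
    for name in names[:n]:
        database[name] = {
            "employee_id": f"E{rng.randrange(10**5):05d}",  # invented field
            "pin": f"{rng.randrange(10**4):04d}",  # invented "private" field
        }
    return database


database = make_database(10, seed=42)
```

Because the values are random and seeded, any fact the model later reproduces can only have come from fine-tuning, and the same database can be regenerated exactly for follow-up experiments.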

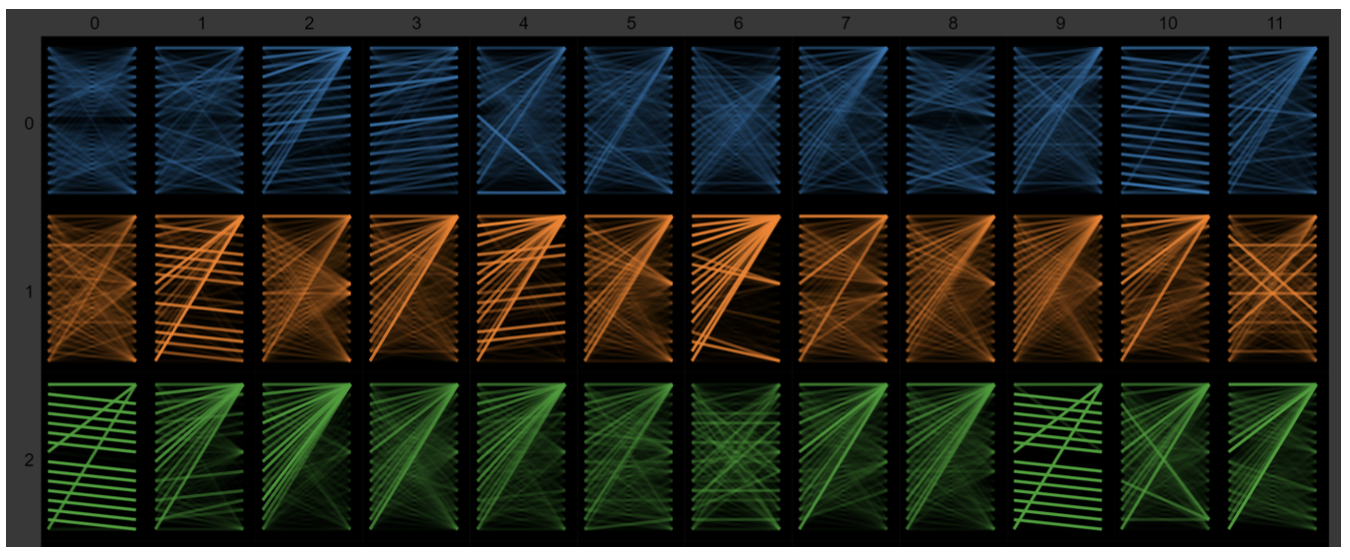

Refusal Mechanism Analysis: Through careful examination of model internals, we investigate the mechanisms by which language models develop and execute refusal behaviors. This includes analyzing attention patterns, activation distributions, and decision boundaries that distinguish between appropriate and inappropriate requests.
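One simple way to compare activation distributions between refused and answered requests is a difference-of-means direction. The sketch below illustrates that general technique on synthetic vectors; it is not a description of this project's analysis. The random vectors stand in for hidden states that would in practice be extracted from the model, and the dimension sizes and the planted signal in `SIGNAL_DIM` are assumptions.

```python
import math
import random


def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def difference_of_means_direction(refused, answered):
    """Unit vector pointing from the 'answered' mean to the 'refused' mean."""
    diff = [r - a for r, a in zip(mean_vector(refused), mean_vector(answered))]
    norm = math.sqrt(sum(x * x for x in diff))
    return [x / norm for x in diff]


def project(vector, direction):
    """Scalar projection of an activation onto the direction."""
    return sum(v * d for v, d in zip(vector, direction))


# Synthetic stand-ins for hidden states (assumption: real ones come from the model).
rng = random.Random(0)
DIM, SIGNAL_DIM = 8, 2
answered = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(100)]
refused = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(100)]
for vector in refused:
    vector[SIGNAL_DIM] += 2.0  # planted difference between the two groups

direction = difference_of_means_direction(refused, answered)
gap = (
    sum(project(v, direction) for v in refused) / len(refused)
    - sum(project(v, direction) for v in answered) / len(answered)
)
```

A large `gap` relative to the within-group spread suggests the two kinds of request are separated along a single direction, which is one candidate for a decision boundary worth examining further.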

Probing Strategies: We use probing techniques to investigate the internal representations and decision-making processes of the network. Probes help us identify which features the model uses to recognize privacy-sensitive contexts and how it generates appropriate refusals.
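A common form of probing is a linear probe: a small classifier trained on frozen activations to test whether a property is linearly decodable. The sketch below shows that general idea with a logistic-regression probe written in plain Python. The source does not specify which probes the project uses, so treat this as an assumption; the activations are synthetic, and `make_activations`, the dimension, and the signal strength are hypothetical.

```python
import math
import random


def make_activations(n_per_class, dim, shift, rng):
    """Synthetic stand-ins for hidden states; label 1 = privacy-sensitive prompt."""
    data = []
    for label in (0, 1):
        sign = 1.0 if label else -1.0
        for _ in range(n_per_class):
            vector = [rng.gauss(0.0, 1.0) for _ in range(dim)]
            for i in range(4):  # only the first 4 dimensions carry signal
                vector[i] += sign * shift
            data.append((vector, label))
    rng.shuffle(data)
    return data


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(vector, weights, bias):
    return sigmoid(sum(w * x for w, x in zip(weights, vector)) + bias)


def train_probe(data, dim, lr=0.1, epochs=20):
    """Fit a logistic-regression probe with plain stochastic gradient descent."""
    weights, bias = [0.0] * dim, 0.0
    for _ in range(epochs):
        for vector, label in data:
            error = predict(vector, weights, bias) - label
            weights = [w - lr * error * x for w, x in zip(weights, vector)]
            bias -= lr * error
    return weights, bias


def accuracy(data, weights, bias):
    hits = sum((predict(v, weights, bias) >= 0.5) == bool(y) for v, y in data)
    return hits / len(data)


DIM = 16
data = make_activations(200, DIM, 1.5, random.Random(0))
train_set, held_out = data[:300], data[300:]
weights, bias = train_probe(train_set, DIM)
held_out_accuracy = accuracy(held_out, weights, bias)
```

High held-out accuracy only shows that the information is present in the representation, not that the model uses it. In practice a probe is usually compared against a control, such as the same probe trained on shuffled labels.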

LIME for Model Explanation: We use Local Interpretable Model-agnostic Explanations (LIME) to provide post-hoc explanations of model decisions. Because LIME treats the model as a black box, it offers an independent check on our understanding of the model's internal reasoning and adds transparency to the refusal mechanism.
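The core idea behind LIME can be sketched without any library: perturb the input by dropping tokens, query the black box on each perturbed input, and fit a proximity-weighted linear surrogate whose coefficients act as per-token attributions. The code below is a simplified, LIME-style illustration, not the `lime` package and not the project's pipeline. `refusal_score` is a hypothetical stand-in for the model's refusal probability, and the distance measure and kernel width are simplifying assumptions.

```python
import math
import random


def refusal_score(tokens):
    """Hypothetical black box standing in for the model's refusal probability."""
    score = 0.05
    if "address" in tokens:
        score += 0.6
    if "home" in tokens:
        score += 0.2
    return min(score, 1.0)


def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def lime_style_weights(tokens, score_fn, n_samples=500, kernel_width=0.75,
                       ridge=1e-3, seed=0):
    """Per-token attributions from a weighted ridge surrogate (unique tokens assumed)."""
    rng = random.Random(seed)
    d = len(tokens)
    rows, targets, sample_weights = [], [], []
    for _ in range(n_samples):
        mask = [rng.randint(0, 1) for _ in range(d)]  # 1 = keep the token
        kept = [tok for tok, keep in zip(tokens, mask) if keep]
        distance = 1.0 - sum(mask) / d  # simplification: fraction of tokens removed
        sample_weights.append(math.exp(-(distance ** 2) / kernel_width ** 2))
        rows.append(mask + [1])  # last column is the intercept
        targets.append(score_fn(kept))
    k = d + 1
    # Weighted ridge normal equations; the small ridge term also covers the intercept.
    a = [[sum(w * r[i] * r[j] for r, w in zip(rows, sample_weights))
          + (ridge if i == j else 0.0) for j in range(k)] for i in range(k)]
    b = [sum(w * r[i] * y for r, y, w in zip(rows, targets, sample_weights))
         for i in range(k)]
    coefficients = solve(a, b)
    return dict(zip(tokens, coefficients[:d]))


tokens = ["what", "is", "alice", "home", "address"]
weights = lime_style_weights(tokens, refusal_score)
top_token = max(weights, key=weights.get)
```

In this toy setup the surrogate should assign the largest weight to `address`, because that token contributes most to the hypothetical score. With a real model, `refusal_score` would be replaced by a call that returns the probability of a refusal for the perturbed prompt.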

Research Impact:

This research contributes to the broader goal of making AI systems more transparent, trustworthy, and aligned with human values. By understanding how models learn and apply safety constraints, we can develop better training methodologies and safety mechanisms for future AI systems. The insights gained from this work have implications for AI safety, privacy preservation, and responsible AI development.